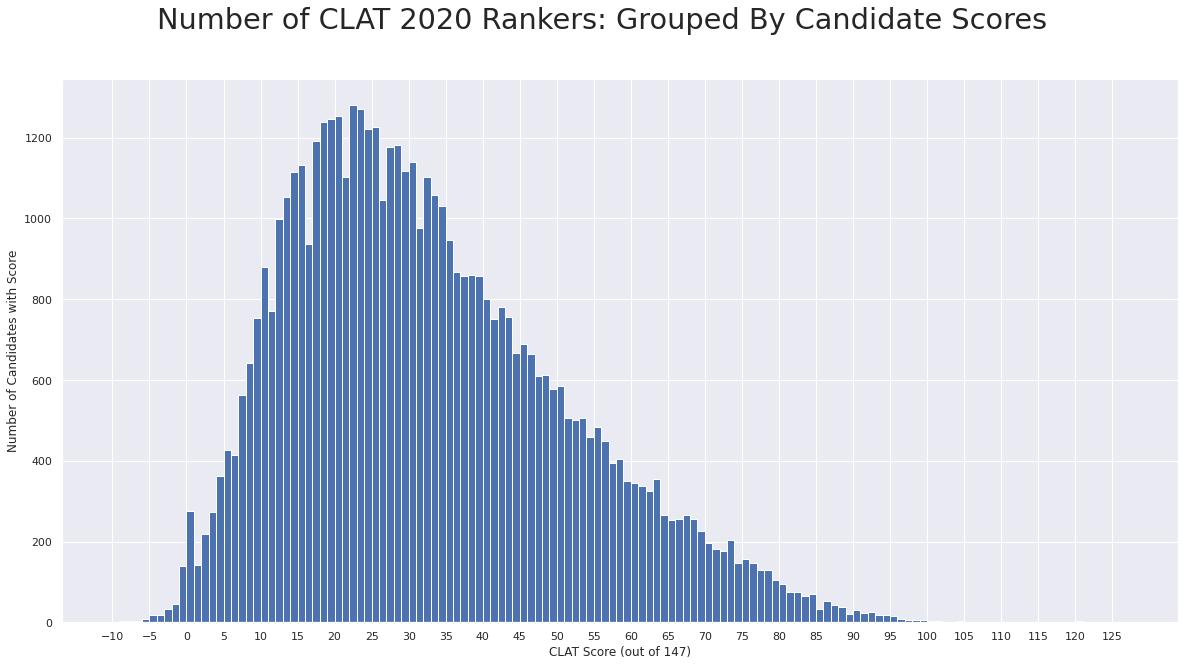

The Common Law Admission Test (CLAT) has released the consolidated merit list including the results of 53,226 candidates today, amongst whom a whopping 25% (around 13,300 candidates) scored 18.5 points or fewer (equivalent to around 13% of the 147 maximum points available in the 2020 CLAT).

Our histogram chart above illustrates the number of candidates who achieved scores, rounding down to the nearest whole number. The data comes from the CLAT 2020 All India rank list of scores, which was released on the CLAT website yesterday (view as PDF / cleaned text CSV file, if you want to do your own data crunching).
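For readers who want to reproduce the binning from the CSV, a minimal Python sketch might look like the following. It assumes that "rounding down" means taking the floor of each score (so that -0.25 lands in the -1 bin), which is an assumption rather than something the chart confirms. The sample scores are made up for illustration.

```python
import math
from collections import Counter


def bin_scores(scores):
    """Count candidates per whole-number bin, rounding each score down (floor)."""
    return Counter(math.floor(s) for s in scores)


# Made-up sample scores, for illustration only (not taken from the rank list).
sample = [18.5, 18.75, 29.75, 0, -0.25, -9.5, 127.25]
bins = bin_scores(sample)

for score in sorted(bins):
    print(score, bins[score])
```

Plotting the resulting counts as bars, one per whole-number score, gives a histogram of the kind shown above.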

At first glance it may look like a standard bell curve, which most standardised tests generally aim for in order to produce more reliable outcomes; however, 2020’s CLAT score distribution is actually asymmetric, with the majority of scores clustered at the low end (the left of the chart) and a long tail stretching towards the higher scores.

The average (mean) score amongst all candidates across the board was 32.7 out of 147 possible maximum points.
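One rough way to check which way a distribution leans is to compare its mean with its median: when a small number of high scores pull the mean above the median, the long tail points towards the high end. The sketch below applies that rule of thumb to made-up numbers. It is a heuristic only, not a formal skewness test, and the function name is hypothetical.

```python
from statistics import mean, median


def tail_direction(scores):
    """Rule-of-thumb check: compare the mean with the median."""
    m, md = mean(scores), median(scores)
    if m > md:
        return "long tail towards high scores"
    if m < md:
        return "long tail towards low scores"
    return "roughly symmetric"


# Made-up scores: most are low, a few are much higher.
sample = [10, 12, 15, 18, 20, 25, 30, 45, 60, 120]
print(mean(sample), median(sample), tail_direction(sample))
```

The 2020 figures reported in this story fit the same pattern: the mean of 32.7 sits above the 29.75 mark that half of all candidates scored at or below.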

At the top end, the All India Rank (AIR) top score of 127.25 was 7 points ahead of the second- and third-ranked at 120.25.

But only a total of 19 candidates achieved scores of 100 or higher.

None of the analysis below decisively proves that there was a technical issue with the 2020 CLAT, though such an issue would explain why quite a few candidates were vocally unhappy about the results. It might also explain the Supreme Court challenge.

Update 23:57: We have reported that the Supreme Court case will be heard on Friday before the same bench that heard the National Law Aptitude Test (NLAT) petition. Senior advocate Gopal Sankaranarayanan, who had also appeared in the NLAT case, has been instructed on behalf of the petitioner.

It does also raise questions that the CLAT consortium might do well to have answers for, in the interest of maintaining transparency.

Update 13:19: CLAT convenor Prof Balraj Chauhan has released a statement:

This only shows that the candidates probably did not put in the requisite effort in preparing for CLAT. This could be either due to COVID-19 or postponements. He asserted that even in JEE the performance of candidates this year is not at the desired level that’s why the minimum cut off has been reduced for the general category from 35 percent to 17.5 percent. If JEE has to halve it’s minimum marks by half it means we are in an extraordinary year and CLAT cannot be criticised on this ground.

He went on to say that some candidates are deliberately spreading misinformation and participating in malicious campaign. For instance one candidate reached out to us that he had score of 115.75 but final score card shows just 01. We checked record of this candidate and it never showed 115.75. He got only one mark as per response sheet as well as scorecard. This candidate did not even visit 65 questions and not attempted 85. Another candidate got zero because she marked all questions for review.

Chauhan also clarified that candidates should not object to exam as they were familiarised with the pattern in sample tests and mocks. They were familiarised with the platform and software.

Negative marking into sub-zero territory

444 out of 53,226 candidates on the released rank list scored 0 points or even fewer, out of whom:

- 270 scored negative marks below 0, as low as -9.5, and

- 174 candidates scored exactly 0 points, visible as the bump at that point in the bar chart.

75% scored at or below 44.5 points

In fact, most of the scores clustered right at the very bottom of the score board:

- 10% of candidates (around 5,300) scored 11 marks or fewer.

- 25% (around 13,300) scored 18.5 marks or below.

- 50% (around 26,600) scored at or below 29.75.

- 75% (around 39,900) scored at or below 44.5.

- 90% (around 47,900) scored at or below 59.5.
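Anyone working from the CSV could compute cut-offs like these with a nearest-rank percentile, sketched below. The function names are hypothetical, the sample list is made up, and the method is one of several common percentile definitions, so results on the real data may differ slightly from the figures above depending on the definition used.

```python
import math


def nearest_rank_percentile(scores, pct):
    """Smallest score such that at least pct% of all scores are at or below it."""
    ordered = sorted(scores)
    rank = max(1, math.ceil(pct * len(ordered) / 100))
    return ordered[rank - 1]


def head_count(total, pct):
    """Approximate number of candidates that make up pct% of the total."""
    return round(total * pct / 100)


TOTAL_CANDIDATES = 53226  # size of the released rank list

# Made-up scores, for illustration only.
sample = list(range(1, 11))
print(nearest_rank_percentile(sample, 50))

for pct in (10, 25, 50, 75, 90):
    print(pct, head_count(TOTAL_CANDIDATES, pct))
```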

Only 3% picked up more than 50% of available points

Scores above 50% of the 147 total marks available were very rare this year:

- only 5% of candidates (around 2,660) scored more than 68.5 points (around 47% of the 147 points available).

- 3% of candidates (around 1,600) scored above the 73.75 mark (around 50% of total points).

- only 1% (around 532) managed to score more than 82.25 (coming to around 56% correct answers), as illustrated in the graph below.

- a tiny total of 19 candidates (around 0.04%) scored 100 or above, ranging from 100.25 to 127.25 points at the top.
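The percentages of the maximum quoted in this story are simple ratios against the 147-point maximum and can be re-checked with a few lines of Python. The function name is hypothetical.

```python
MAX_MARKS = 147  # maximum points quoted in this story


def share_of_max(score, max_marks=MAX_MARKS):
    """Return a score as a percentage of the maximum available marks."""
    return 100 * score / max_marks


for score in (18.5, 68.5, 73.75, 82.25, 100):
    print(f"{score:>6} -> {share_of_max(score):.1f}% of {MAX_MARKS}")
```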

Q: How can low across-the-board scores be explained?

There are several possible explanations, though all of these remain theories in the absence of CLAT’s formal confirmation of figures of complaints received and perhaps a more detailed confirmation of how the system worked.

a) Super hard?

The first and most obvious option is that the CLAT was very, very hard this year and nearly impossible to complete.

That seems to have been borne out by accounts of the 2020 CLAT’s difficulty by students and CLAT coaches.

Perhaps kids this year simply did really badly at a very hard CLAT, or standards are falling. Either could have been exacerbated by negative marking for incorrect answers, a somewhat late change in the test pattern to only 150 questions in 120 minutes (down from 200) with an emphasis on English reading comprehension, and Coronavirus-induced stress at physical exam centres using novel online software.
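To see how quickly negative marking can drag a total down, here is a small sketch. It assumes one mark per correct answer, a 0.25 deduction per incorrect answer and nothing for unattempted questions. Those values are an assumption for illustration (they are consistent with scores falling on quarter-point steps) and are not stated in the rank list itself.

```python
def clat_style_score(correct, wrong, mark=1.0, penalty=0.25):
    """Total under an assumed +1 / -0.25 scheme; unattempted questions score 0."""
    return correct * mark - wrong * penalty


# 100 attempts with 40 right and 60 wrong.
print(clat_style_score(40, 60))

# No correct answers and 38 wrong ones.
print(clat_style_score(0, 38))
```

Under these assumed values, a candidate who attempted 100 questions and got 40 right would end up on 25 marks, and 38 wrong answers with none right would produce -9.5.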

Having a test so hard that it pushes the bulk of the bell curve far to the left is not ideal in standardised testing, which generally aims for an equal number of candidates to perform above and below the mean, in order to allow for a clear gradation of performance.

That said, while undesirable, an ultra-hard test would not be fatal to the exam or its results.

b) The scores are often this low?

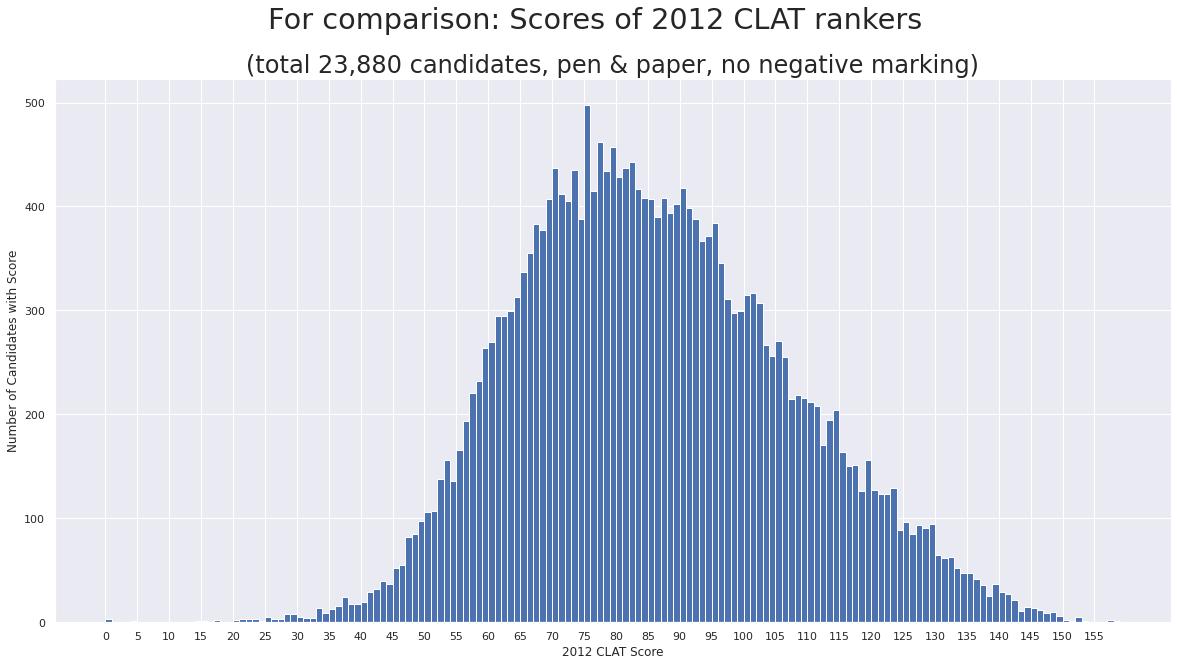

It is possible that CLAT scores are often clustered at the lower end, though prima facie evidence points against that.

While we do not have access to more recent CLAT scores data, we have been able to dig out the 2012 CLAT’s scores and compute the distribution of scores (see graph below - if you have access to any more recent CLAT scores for all candidates, we would be grateful if you could share these with us).

In 2012, the mean score was 85 and: (i) 25% scored 70 or below, (ii) 50% scored 84 or below, and (iii) 75% scored 100 or below.

The bell curve is nearly symmetrical along the central average and much more evenly spread out, as one might expect with a well-designed exam.

However, some provisos are required: the 2012 CLAT was a very different exam from the modern CLAT, with a different pattern and probably much easier questions. It had only 23,880 candidates in the merit list. It also had no negative marking. And it was a pen and paper exam.

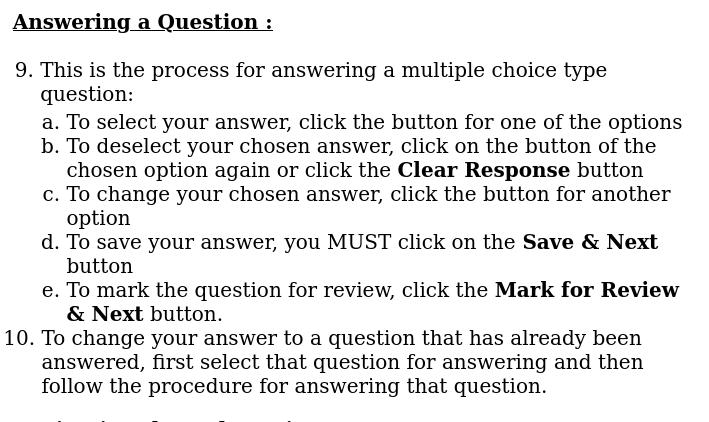

c) Mark for review?

However, some of 2020’s very low scores might also be indicative of some candidates who (mis)-used the “mark for review” functionality that we had reported on 1 October, shortly after the final exam.

“Mark for review” in the 2020 CLAT and its five mock tests effectively cleared and reset an answer rather than simply bookmarking it for a possible later look.

However, this arguably unconventional functionality was fairly clearly explained in the CLAT and the mock exam instructions.

We don’t currently know how many candidates used the mark for review function wrongly, though we have had reports of candidates who claimed they were not given enough time in their test centres to read the instructions fully.

And many others, of course, may not have read the instructions in great detail at all, even during the mocks and especially during the final exam.

But if that number runs beyond the hundreds, it could explain why many scores were lower.

And yet another candidate had told us they had clicked ‘mark for review’ on every question, out of habit from other testing platforms, which might explain the relatively high number of scores of exactly 0.

d) Clear responses / click to deselect?

Another potential reason for the low scores this year could be that candidates did not change their answers in the way the system wanted them to, as we had reported previously.

Without navigating away from a question, a candidate might first have clicked a), reconsidered their answer, and might then have clicked b) immediately after.

On a very strict reading of the CLAT and the mock instructions, however, candidates were expected to either click ‘clear response’ or to first click a) again to deselect it and then click b).

It is still not entirely clear at the moment whether the system would have registered someone simply clicking on b) without ‘clearing’ a) first.

Candidates who just clicked on b) would not have received any visual or other on-screen confirmation or cue that they had done anything incorrectly, at least according to the mock exams.
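The open question can be made concrete with a toy model of the two possible behaviours. The sketch below is purely hypothetical and is not the CLAT software: in the "lenient" model a click on a different option replaces the current selection, while in the "strict" model such a click is ignored until the current selection has been cleared. Which of these, if either, matches the real system is exactly what remains unconfirmed.

```python
def final_answer(clicks, strict):
    """Replay a click sequence under a hypothetical selection model."""
    selected = None
    for option in clicks:
        if option == "clear" or option == selected:
            # 'Clear response', or a second click on the chosen option, deselects it.
            selected = None
        elif selected is None or not strict:
            # A fresh selection, or a replacement in the lenient model.
            selected = option
        # Strict model: a click on another option while one is selected is ignored.
    return selected


# Candidate clicks a), reconsiders, then clicks b) straight away.
print(final_answer(["a", "b"], strict=False))
print(final_answer(["a", "b"], strict=True))

# Candidate deselects a) first, as the instructions describe.
print(final_answer(["a", "a", "b"], strict=True))
```

Only the first click sequence gives different results under the two models, which is why confirmation of the actual behaviour matters.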

e) Other?

...

The officially sanctioned workflow

In its press release responding to some of the complaints, on 3 October, the CLAT had explained that the instructions (see above) in both mock and final exam had:

clearly said ‘To deselect your chosen answer, click on the button of the chosen option again or click the Clear Response button.’ The candidates thus had an option either to click on the initially chosen option and change their option OR click on ‘clear response’ and give fresh option. For both MARKED FOR REVIEW as well as CLEAR RESPONSE, Audit trail recorded same response and thus no candidate was disadvantaged in anyway.

We have asked the CLAT for official confirmation on this process and whether only the strict reading of the instructions (i.e., deselecting the wrong answer first or using ‘clear response’) would have resulted in answer changes getting recorded.

If that is indeed the case, it might offer a likely explanation for why many candidates may have lost some points, or, in some cases, might have even lost a greater number of points (as some complaints seemed to suggest).

Since the exam was of a tough standard and conducted via a computer with a mouse, it is possible many candidates might have made repeated clicks while they pondered a correct answer, as they might commonly also expect to do on forms on the web.

This kind of behaviour might, for instance, explain candidates such as the one the CLAT described as an example of its audit in its 3 October statement:

In fact, the Audit log showed that one candidate had changed the response as many six times and the system recorded the 6th and the final response. Thus the apprehension that earlier response was not changed has no basis.

That statement had been made after a more detailed forensic review of complaints, which had concluded:

The Expert Committee randomly examined the Audit Log of number of candidates who had raised objections to the response sheet, the Expert Committee did not find any discrepancy between the Clicks made by the candidate as recorded in the Audit Log and the Response Sheet.

But how come a few candidates did much, much better than most others?

If there were issues with the user interface or the instructions, how come some candidates performed exceedingly above the statistical averages and how come the scores at the top were distributed so widely?

One might assume that a very few candidates simply performed much better than the vast majority of other candidates because they were outliers who were very smart and well-prepared.

But if there were issues with how candidates understood the user interface, there are other possible theories. Is it possible that the candidates who performed the best were in a statistical minority and for whatever reason:

- never used the ‘mark for review’ functionality, or

- never or only rarely changed their minds over a question and immediately picked the right answer first, only clicking once, because that is how they take tests, or

- they were one of a minority that had read the instructions very well, interpreted them correctly and had used the software exactly as intended on all counts (or had been reminded to do so by their coaching institute), or

- experienced a combination of all of the above?

At present it is impossible to say with certainty what the right explanation is for the low scores, but the answer to this could end up explaining much of the mystery with the CLAT scores this year.

Social media had been abuzz with complaints when provisional answer sheets were first released to candidates, and newspapers had variously reported 400, thousands, and up to 40,000 complaints about the 2020 CLAT, in the last case citing an unnamed source.

The 40,000 figure certainly seemed high (suggesting that it might include multiple complaints per candidate over wrong answers to questions).

But when 39,900 candidates scored 44.5 points and below, it is not inconceivable that thousands of individual CLAT aspirants did actually complain about their low scores, whether deserved or not.

We have requested further comment and clarification from the CLAT consortium.

Update 13:19: A statement from the CLAT is included at the top of this story now.

As a reader, if you have any thoughts or useful data, or are amongst the top percentage points in the ranks or know someone who is, please do connect since it might help clear up some of the above issues: Contact Us.


bangaloremirror.indiatimes.com/bangalore/others/candidates-fume-as-clat-results-are-out/articleshow/78503507.cms?utm_source=contentofinterest&utm_medium=text&utm_campaign=cppst

@Kian: Troll spotted!

Let's say you are right, so do you think any aspirant out there will love to have his/her chances of securing a seat in the top NLUs reduced for some political gimmick where less deserving candidates are given a better opportunity for the only reason that they were lucky enough to be born in that state or have the opportunity to study there? By the so-called sons-of-the-soil logic of extending the domicile quota, all the NLUs will lose the somewhat national character that they still have; why then even bother to call them 'national law univs'? The reason I pointed out the need for state funds is the fact that in all the NLUs, in return for domicile reservation, they get more funds from the state. I do not support this practice but if it does happen then NLSIU definitely should get the benefit. Why lose the 'national' tag for no benefit at all? As per the submissions in the Supreme Court, the state provides a meagre 50 lac annually for an operating cost of 30 cr. I am sure a lot of families in India will have expenditure near to, if not greater than, 50 lacs.

Do you think that students whose parents have transferable jobs, or who had to move to other parts of the country for some other reason, don't deserve the chance to study in top colleges just because they don't belong to a particular state? After all, these parents do pay income tax. If one wants to see more interest and a greater number of aspirants in the legal field as compared to other fields, then these NLUs will need to be free from such populist gimmicks by state govts, making them truly national. Useless reservations like the often misused NRI sponsorship quota in these colleges need to be done away with. Your merit and not your state or ability to pay should decide the college you get.

P.S.: I secured a rank in CLAT, although it was lower than what I was expecting, but again I have to be content with whatever I get because the majority of the serious or even non-serious students do not have the energy to fight with the consortium and appear for a CLAT retest. If you think you could have done better, then the 2021 CLAT is just 6 months away so you can appear for that. Let's not make this any worse than it already is.

timesofindia.indiatimes.com/articleshow/78502735.cms?utm_source=contentofinterest&utm_medium=text&utm_campaign=cppst

Lucknow's high rankers aim for career in judiciary

timesofindia.indiatimes.com/articleshow/78503391.cms?utm_source=contentofinterest&utm_medium=text&utm_campaign=cppst

Bhopal’s Debmalya gets 27th rank in CLAT 2020

timesofindia.indiatimes.com/city/bhopal/bhopals-debmalya-gets-27th-rank-in-clat-2020/articleshow/78501009.cms

1. Jindal

2. Symbi Pune

3. NMIMS

4. GGSIP

5. Nirma

6. Symbi Noida

7. Christ

8. Bennett

9. Symbi Hyderabad

10. GLC

Jindal (Prefer after GNLU)

Symbi Pune(Prefer after HNLU, RMLNLU, RGNUL)

GLC(same as above)

Nirma(Prefer over Lower NLUs like Assam, Trichy etc)

Symbi Noida

Symbi Hyderabad

GGSIP

NMIMS

Christ

Bennett

Please give your suggestions and point out any mistake.

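| All India Rank | Score |
| --- | --- |
| 1 | 177.25 |
| 7 | 173.25 |
| 10 | 172.25 |
| 50 | 165.75 |
| 137 | 161 |
| 305 | 155.75 |
| 546 | 150.75 |
| 882 | 145.75 |
| 793 | 147 |
| 996 | 144.5 |
| 1285 | 141.5 |
| 1535 | 139 |
| 1984 | 135.5 |
| 2241 | 133.5 |
| 10358 | 101.75 |
| 14300 | 92.75 |
| 15281 | 89 |
| 16728 | 87.25 |
| 19311 | 82 |
| 26244 | 69.75 |
| 27587 | 67.5 |
| 30310 | 63.25 |
| 34965 | 56 |
| 39179 | 49.5 |
| 43861 | 41.5 |
| 53989 | 18 |
| 55046 | 13.25 |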

Law universities not following the law of the land.

Someone interested should file a case or something.

Kian maybe you can ask faizan about this ews thing and as for sudhir, maybe he likes to break laws.(just joking no hate towards anyone)

I couldn't read the instructions at the centre because the "invigilator" was deliberately shouting things like, "now select next, now do this". Entering the exam hall late just because of the long queue cost me a year. I had set 20 questions for review, which is entirely my fault. I'm not arguing. But being left with the questions I'd marked accurately, which is around 104, I got less than 30. I don't know how or where I'd gone wrong.

I have no choice but to prepare for 2021. I don't know what will be the SC decision regarding this issue. But I beg for god sake make clat 2021 OFFLINE, can't deal with this issue anymore.

I'm exhausted.

2. Aadya Singh, Patna

3. Jai Singh Rathor, Patna

5. Anand Kumar, Patna

6. Shailja Beria, Kolkata (also LSAT rank 1)

10. Shantanu Bishnoi, Jaipur

14. Aman Patidar, MP

26. Virendra Kabra, Jaipur

27. Debmalya Biswas, Bhopal

31. Palak Kumar, Kolkata

35. Shikhar Sharma, Kolkata

48. Yashwant Kumar, Patna

57. Ashish Sharma, Lucknow

Please fill in the missing rankers.

consortiumofnlus.ac.in/clat-2020/

Names have been provided in this list, rank wise.

Anyways what is in the name of people going to NLSIU ? Anything special?

1. Aprajita, Bikaner

28. Vedant Bisht, Krishnanagar, West Bengal

50. Aditya Sarma, Bhubaneshwar

In fact, Kolkata is the only major city with some high rankers. But ironically most are not Bengali, while rankers from other cities are Bengali but will not make it to NUJS as they are squeezed out by the domicile quota! What an irony!

See video at 8:30.

It's good to see that "small town Kota types" are cracking the exams. The days of NLS (which I'm familiar with) being an elite bastion of IAS kids and the posh metro schools should become a thing of the past.

minus 4 ( - 4 ). So much for CLAT 2020 being a fair process. If some students want to approach court , I don't think it should be anybody's problem.

Tier 1:

1. NLSIU Bengaluru

2. NALSAR Hyderabad

3. NUJS Kolkata

4. NLU Jodhpur

5. NLIU Bhopal

6. NLU Delhi

Tier 2:

7. MNLU Mumbai

8. GNLU Gandhinagar

9. HNLU Raipur

10. RMLNLU Lucknow

11. RGNUL Patiala

12. Symbiosis Pune

13. GLC Mumbai

14. ILS Pune

15. JGLS Sonipat

16. NMIMS Mumbai

Tier 3:

17. NUALS Kochi

18. CNLU Patna

19. NLUO Cuttack

20. NUSRL Ranchi

21. TNNLS Trichy

22. NLUJA Gauhati

23. DSNLU Vishakapatnam

24. MNLU Nagpur

25. Symbiosis Noida

26. KIIT Bhubaneshwar

27. BHU Varanasi

28. Symbiosis Hyderabad

29. AMU Aligarh

30. Amity Noida

31. Christ Bengaluru

32. Nirma Gandhinagar

33. Jamia Delhi

34. USLLS Delhi

NLU Shimla/Aurangabad/Jabalpur/Sonipat are in the initial years of operation. Hence we have not included them in this ranking.

www.imsindia.com/LAW/top-colleges.html

www.imsindia.com/LAW/blog/nlu-ranking-top-law-colleges-india/

20. Debdutya Saha, Kolkata

23. Gunjan Modi, Bengaluru

timesofindia.indiatimes.com/city/jaipur/pink-city-turning-into-a-coaching-hub-for-clat/articleshow/78523636.cms
