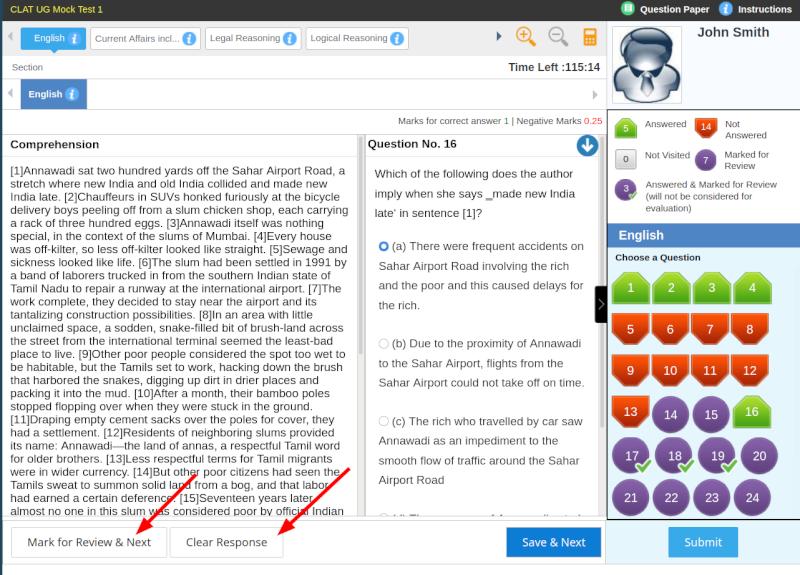

Potential user experience (UX) flaws in the design of the computerised Common Law Admission Test (CLAT) 2020 may have caused problems for hundreds of candidates, or more.

A flood of vocal complaints from candidates on social media today (1 October), after provisional scores were released the previous day (30 September), alleged that their scores did not match the answers they had actually given, with some claiming a discrepancy of 30 marks or more.

Many also claimed that they had received zero marks for numerous questions they had answered (correctly).

We have also spoken to around a dozen candidates. While it is impossible to confirm authoritatively at this point whether this explains all of the candidates’ complaints of technical issues, many of them appear to have been caused by the exam’s potentially counter-intuitive and non-standard implementation of the “mark for review” feature.

In the provisional score software made accessible to candidates, this might have shown up as a question with a “chosen option” but a status of “Marked For Review”, for which the candidate received 0 marks (see screenshot below).

We had first reported the potential issue with 'mark for review' on the day of the exam (28 September).

We had written back then:

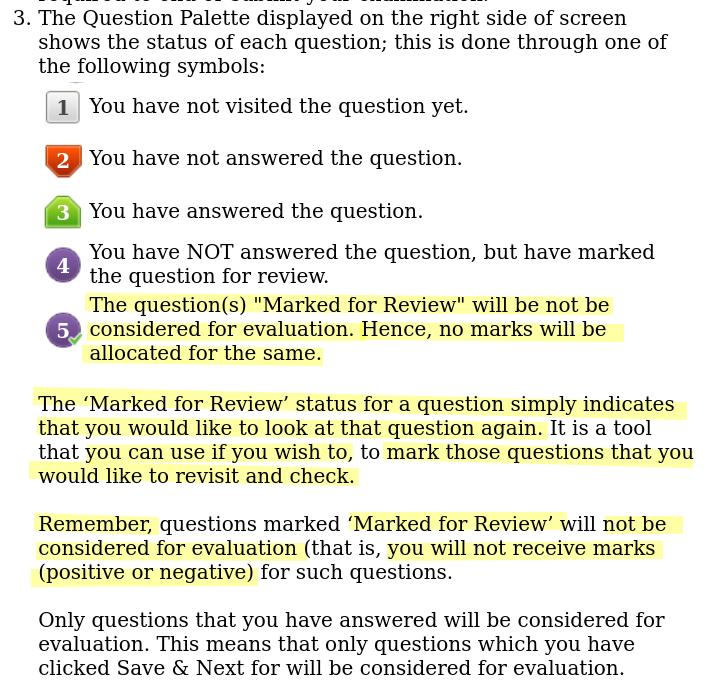

Apparently, the exam’s instructions today explicitly noted that any questions “marked for review”, in order to bookmark these and return to them later, would not be counted (even if the candidate had given an answer before clicking the ‘mark for review & next question’ button).

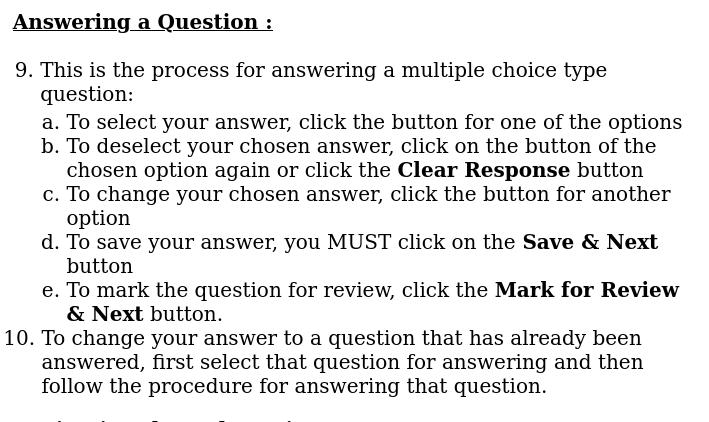

Furthermore, we understand that when returning to answers that had been ‘marked for review’, candidates were also required to first click a button marked ‘clear response’ before clicking on the new answer and submitting it.

If ‘clear response’ was not clicked before submitting the new answer, the system may not have registered the new answer in the way that had arguably been intended by the candidate.

We have just tried out the mock test, which did appear to accept (and remember) new answers to questions ‘marked for review’, even without pressing the ‘clear response’ button.

We have not been able to confirm whether this worked differently, or was explained differently on screen, in the final exam.

Update 01:06: As pointed out in a comment below and by several candidates to us directly, it is possible that once you have clicked on an answer and then immediately clicked on another answer (without submitting the answer and without clicking ‘mark for review’), the software may have counter-intuitively frozen the first answer as the one that counts (unless you first clicked ‘clear response’). The instructions are not 100% clear on this (see below), and we have not been able to independently confirm that this is the way the software worked, but have reached out to the CLAT for comment.
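To make the alleged behaviour concrete, below is a minimal, hypothetical Python sketch of how the rules described above might interact for a single question. The class and method names (`QuestionState`, `click_option`, `clear_response`, `save_and_next`, `mark_for_review_and_next`, `score`) are our own illustrative inventions, not the CLAT software’s. The “frozen first answer” rule is only what candidates have alleged and has not been confirmed; that saving clears a “marked for review” status is likewise an assumption.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class QuestionState:
    """Hypothetical model of one question's state in the exam interface."""

    chosen: Optional[str] = None     # option the system has recorded
    answered: bool = False           # set by 'Save & Next'
    marked_for_review: bool = False  # set by 'Mark for Review & Next'

    def click_option(self, option: str) -> None:
        # Alleged, unconfirmed behaviour: the first option clicked is
        # "frozen"; later clicks are ignored until 'Clear Response'.
        if self.chosen is None:
            self.chosen = option

    def clear_response(self) -> None:
        # 'Clear Response' wipes the recorded option so a new one can be set.
        self.chosen = None
        self.answered = False

    def save_and_next(self) -> None:
        # Assumption: saving records the current option as answered and
        # removes any earlier 'marked for review' status.
        self.answered = self.chosen is not None
        self.marked_for_review = False

    def mark_for_review_and_next(self) -> None:
        self.marked_for_review = True


def score(q: QuestionState, correct: str, mark: int = 1) -> int:
    """Score one question; any negative marking is ignored for simplicity."""
    # Per the mock instructions, anything still marked for review scores zero,
    # even if an option had been chosen.
    if q.marked_for_review or not q.answered:
        return 0
    return mark if q.chosen == correct else 0


if __name__ == "__main__":
    # A candidate clicks A, changes their mind to D and saves,
    # without first pressing 'Clear Response'.
    q = QuestionState()
    q.click_option("A")
    q.click_option("D")
    q.save_and_next()
    print(q.chosen, score(q, correct="D"))  # prints "A 0"
```

Under the same assumptions, calling `clear_response()` before selecting D would record D and earn the mark, while a question left marked for review scores zero whatever option was chosen. Whether the live exam software actually behaved this way has not been confirmed.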

Online vs pen and paper

But there is a case to be made, from a usability perspective, that the system should also have accepted a click on the big blue “Save & Next” button instead of first requiring a click on a “Clear Response” button.

Likewise, consider a candidate who takes a good stab at an answer, saves it and clicks ‘Mark for Review’, hoping to return to the question later to double-check it, and then runs out of time.

As in a traditional pen-and-paper exam, the candidate might reasonably have expected that at least their original answer would count.

In fact, using the ‘mark for review’ button as intended might have been a severe detriment. Given the time pressure, you are unlikely to have time to go back to more than a few questions: unlike a pen-and-paper exam, which you can quickly skim, going back to each question entails clicking on small icons, which could take a non-negligible amount of extra time.

A question of instructions?

While we do not have a copy of the instructions provided to candidates in the exam, we have been able to confirm the instructions provided to candidates in the mock exam (see a full PDF of the instructions below, coming to around three pages of text).

Update 11:04: We have been told by an authoritative source close to the CLAT that the instructions in the final exam were identical to the below instructions given in the mock (except for having removed reference to the ‘calculator’ option).

As the excerpt from the mock exam above illustrates, the instructions definitely addressed the ‘mark for review’ issue and spelled out (fairly) clearly that the “mark for review” button would result in zero points if the candidate did not return to the question later.

However, the second issue, regarding the ‘clear response’ button, was expressed less clearly, in the ninth and tenth of the 14 points of instructions provided to candidates (see screenshot below).

On a careful reading of points 3, 9 and 10 together, the instructions do seem strongly to imply that you need to click the ‘clear response’ button to change your answer.

However, this could arguably have been stated much more clearly, such as by:

- including reference to the ‘clear response’ button in the third point, which deals with ‘mark for review’, or

- mentioning ‘mark for review’ again under point 10, preferably in bold.

In any case, it’s fair to say that the instructions are somewhat confusing.

On the other hand, you could argue that the language and instructions are no more obtuse than those used in terms and conditions, contracts or judgments that the budding lawyers may read one day.

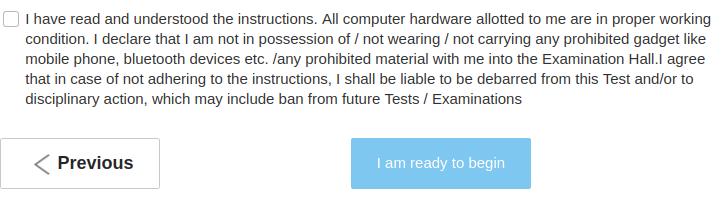

And the mock exam, much like Facebook or other websites with long T&Cs that no one ever reads, also included an unequivocal checkbox stating: “I have read and understood the instructions.” (see screenshot below).

An early reading comp section?

While it is possible to chalk this up to a reading comprehension failure by candidates, it is arguably an understandable one for those used to other competitive online exams, most of which do not use this kind of format requiring multiple button clicks.

On top of that, the stressful in-person exam was held in the midst of a global pandemic, which may have created additional problems that hampered candidates’ ability to properly parse the instructions before the clock officially started ticking.

One candidate told us: “In the actual exam, I marked all options for review out of habit. And as I was given a 13:45 reporting time, I didn’t get much time to actually read the instructions; neither did the people at the centre tell us about this.”

“This was unique to CLAT; no other exam does this kind of weird stuff,” they added.

CLAT consortium: Experts to respond by 3 October

We have reached out to CLAT consortium member and Nalsar Hyderabad vice-chancellor (VC) Prof Faizan Mustafa with the above issues.

(Mustafa had taken over the day-to-day management of the CLAT after the 3 September ‘Claxit’ of NLSIU Bangalore, whose VC and CLAT secretary Prof Sudhir Krishnaswamy had been handling the majority of the CLAT’s technical preparations and mock exams until then.)

Mustafa explained, on behalf of the consortium: “We have received many objections. The objections are being examined by the expert committee.”

He said that this expert committee would deliver its report by 3 October to the consortium’s executive committee and possibly also the governing body, and that thereafter the final answer key and (potentially revised) score sheets would be uploaded.

“The online exam has an inbuilt system of generating an audit trail of each and every candidate, which is the most authentic proof of what they really did,” Mustafa noted. “We are examining the audit trail of some of the candidates who have raised objections about the response sheets.”

“But what is the expert opinion of these sheets, we will know once the expert committee [makes its report on 3 October],” added Mustafa.

Potential errors in answer key, TBC

As in nearly every CLAT year, we understand that candidates have alleged that at least 10 questions in the answer key may have had wrong model answers or potentially multiple correct answers.

We have not yet been able to check all of those in detail, and it is likely that only a few of those complaints will eventually be accepted.

“The expert committee that will recommend on withdrawal of questions will be ready by 3rd [October], when these will be placed before the executive committee [of the CLAT], and most likely the governing body, and thereafter the final answer key will be uploaded,” said Mustafa.

Auditing the 'mark for review' audit trail

- Would it be fair to penalise candidates who did not read or follow the instructions properly?

- Would it be fair to ‘reward’ or make exceptions for candidates who technically did not follow instructions properly, by awarding them points on the basis of how they thought the exam software worked?

- What about candidates who may not have had time in the exam hall to read the instructions due to pandemic or other logistical issues? And is it possible to even reconstruct if a candidate had faced such issues?

- Does having such instructions for using the exam software potentially disadvantage those from less privileged backgrounds, some of whom may never have used a computer or a full web browser before?

- Alternatively, does it perhaps disadvantage those who have taken multiple other competitive exams that used similar systems and assumed the CLAT software would have worked similarly?

- Finally, would the decision to make an exception potentially disadvantage those candidates who did follow the instructions properly?

As with the 2020 CLAT itself, which was widely reported to be more difficult than the mock exams, there are no easy answers here.


The consortium should award and deduct marks on questions marked for review and should similarly take the option ultimately chosen as final irrespective of exam guidelines as the candidates were making those decisions on the basis of logic and prior experience.

My advice to these students is to stop causing trouble and face reality. If you do not get into an NLU there are many options: JGLS, Symbi, Amity, GLC, Christ etc.

At any rate, for all their issues with access and diversity, at least private law schools can properly conduct entrance exams.

The consortium had a few extra months and still made a hash of it.

Also, by the way, one does not ‘pass’ CLAT.

*don’t want to

Cheers!

While I am under a legitimate expectation that I changed it, marked it as D and saved it, the software has apparently frozen A.

9(c) is specifically for changing options

And 9(b) is just for deselecting.

9(b) and 9(c) should have been clubbed and an "and" should have been there.

www.legallyindia.com/lawschools/india-today-law-school-rankings-2016-are-out-blablabla-lol-why-no-one-should-care-etc-20160523-7630

You have given a very apt summary of the issues and indeed , these are genuine issues. Software was counter-intuitive [...]

This is a systemic issue. After all, this happens every year. Despite charging a hefty Rs 4,000 for a two-hour paper, they cannot make an error-free paper. I don't think they spend even Rs 4,000 (a single student's fee) on making an error-free paper.

Fact is that CLAT consortium is not an expert in conducting exams. It screws up every single year. It would be much better if the responsibility for conducting the exam is given to NTA, which has better record and processes. Maybe it will also make the exam cheaper and less exclusionary.

Srikrishnarpanamastu ("May this be offered to Sri Krishna").

Also, don't say "WeWantClatretest".

Not all of us are a part of that bandwagon.

Thank you so much, Mr. Gantz for highlighting this issue.

I clearly remember that I attempted all the questions and that my score is well above what I have been given. Please don't make such bold comments; some students will genuinely take them to heart and make hasty decisions. If you don't believe the discrepancies are real, I request you to have patience until further notice from the consortium.

And yes, I'm sure there are a lot of private colleges around which are better than or the same as NLUs, but don't you think that is asking many students to accept mere luck or fate? To think that I, along with many others, have put in hard work for more than two years, only to be dismissed due to errors on the part of the management, is very tiring and stressful for students.

law.careers360.com/colleges/ranking.

Firstly, the mock simulation of the CLAT awarded you marks for answers changed without clicking "clear response". Secondly, it is quite confusing to infer that the "clear response" option has to be used for all options, even the ones you change in due course of the exam after properly clicking "save and next". Thirdly, it is common exam nomenclature that "clear response" is what you use when you click an option but later decide not to attempt the question because you're doubtful. You thereby "clear that response" and leave the question unattempted. AND THE MOST IMPORTANT point to be noted, sir, is that the most recent option you save is the one displayed on the screen. So how are students expected to know what the system accepts and what it doesn't? Say I were to click A, then click B, and then SAVE B and move on to the next question; only then would the box turn green. In any conventional exam, this would mean that the most recently saved answer choice was the one the candidate wished to save.

If you didn't have the capacity to conduct the exam online, why did you conduct it online?

I was allotted my system at 2 o'clock.

The paper started at 2 o'clock.

Where would anyone have had the time to read the instructions?

I challenge the VCs of all the NLUs: if any of them has the guts, let them read the full instructions in 10 seconds.

They took Rs 4,500 from us; nobody is taking this exam for free.

Consortium should give chances to the students, who have faced problems

They have postponed the exam many times, for long periods, resulting in the NLAT. Aspirants were stressed and confused because of this. Instead of simplifying the exam system, the consortium added further complications by deviating from the other online tests that aspirants are comfortable with. The consortium says it has an inbuilt audit trail system to check the complaints. How will you convince aspirants about your system's checks when they have no way to inspect or observe them? They have to agree with whatever you say without being involved in the checking.

With this problem, aspirants who scored less may be appealing while those who scored more may be keeping quiet. How will you convince both?

Whichever way one views it, CLAT 2020 is a failure.

NLU grads can go to any extent to justify the consortium's fault. There is something called legitimate expectation, tomorrow they will say that cross is tick and tick is cross and you will say that its justifiable because its mentioned in the instructions?

However, I believe the larger problem is that when a student marks option A and then either immediately switches to b or comes back and switches to B, the system doesn’t record that unless the clear option is used. This was not mentioned in the instructions at all.

It’s sad to see the insensitivity in some of these comments, claiming that people who are raising these issues are merely disappointed by their poor performance. There are hardworking students who have lost 7-10 marks because of this clear option issue and that is not acceptable for an exam that is already so competitive.

YEAH RIGHT GO TO THE COURT OF LAW. :)

The NLS entrance exam, for all its follies, impacts fewer students, because if you don't get in to NLS because of a crappy entrance test, you've still got other affordable quality options. It was also for 500 rupees!

CLAT actively undermines the ability to exercise these choices by filing proxy writ petitions against exams like the NLAT, and then does a disastrous job in conducting its own exam (historically, this happens every single year).

When the common entrance test was first ordered by the SC, the individual exams were particularly expensive, and not online. If 8 different colleges were to do 500 rupee exams (like NLAT), that's got to be better than 1 CLAT (because you're not screwed by just having one bad day, and you're not screwed just because one set of administrators didn't care enough to set a decent test)

NLS, NALSAR, NUJS, NLUJ, should really get out of the CLAT and do their own exam. Do it now, for next year, so none of the legitimate expectation arguments arise. Do it with the relevant permissions of the Academic and Executive Councils, and then let's see how the CLAT defends its existence.


Option 1: Retest IF you lost a place in an NLU because of this.

Option 2: Fee refund IF you lost a place in an NLU because of this.

Otherwise you can go to JGLS, Symbiosis or Amity.

Idk how they're gonna do it. I didn't get paid for it [...]. When I paid for it I expect fair correction. FYI my elder brother studied in NLSIU so don't go on telling me how it works around there. Buddy keep it going :) [...]

CLAT papers. The paper was entirely FINE.

THE ACTUAL PROBLEM

The students who have secured good marks as per their "expectation" obviously won't say anything against it, because they are legit "SAFE&SECURE" with their marks. So when the problem isn't about you, you are obviously not going to do anything about it. Had it been the other way around for you guys, imagine some random person came up to you and said you don't deserve it. The hypocrisy - ugh, nobody wants it.

Dude even I don't have the intention to re-write the test neither do I want to.

Stop comparing CLAT to other exams.

I don't support the people who are hurling abuse at the respected professors and institutions, which were established long ago and which I have a great amount of respect for. Nor did I do it.

I only want the people who deserve the actual marks to get them. Also, it would help people if you guys could legit stop generalizing everything according to your whims and fantasies. Just because you're happy with your marks doesn't mean you can stop others from speaking up about the wrong being done.

Now don't go on and say "Had I been in your place I would have accepted my fate and let go and try for Private institutions blah blah blah.." We all know that's not true, never would have been.

I'm not going to let some random 6 people tell me what work I had put in and if I deserve it or not. I'm sure neither of you guys want to hear that. So please let people get marks for what they have written and not some gross error due to technology.

Do I blame the consortium for this? To an extent yeah I do, but not the kind that I'd go swear on them, so people don't do that either. not gonna help us.

twitter.com/YashJasuja4/status/1311676413112590338

twitter.com/AdiSadashiv/status/1311682262283427848

twitter.com/SharviiS/status/1311754968232153088

twitter.com/niranjan253707/status/1311856572520259584

twitter.com/oyekoki/status/1312080396960505856

twitter.com/Meenakshi_2002/status/1312028329130692609

Consortium says they have checked 150 complaints and they have not found any mistakes. Who witnessed this? Your self? Is this transparency? Without involvement of complainants.

You say the audit trail will be submitted to court. You are instigating aspirants to go to court. Is it possible for all aspirants to go to court? You are taking advantage of your position, where public money will be used to fight your case.

Instead, why can't you send the audit trail to those who complained? Will it not clear their doubts, or is there something to hide?

Instead of solving the problem in a transparent way, the consortium is busy praising itself just to satisfy its ego. This is like parents saying their child alone is supreme. Which child is supreme will be decided by society.

CLAT 2020 is a failure with lots of doubts left. Mainly due to wrong questions and answers and due to confusion prevailed in instructions to aspirants and non clarity in clauses.

At least from CLAT 2021 onwards, the exam should be paper-based, as the consortium fails every time it conducts online tests. CLAT 2019 was very successful.

It is widely observed that all is not well in the consortium, which resulted in the NLAT, with so many present and previous players locking horns. If these ego clashes are not contained and tests continue to be conducted like this, surely in future each NLU will opt out of the consortium.


He was running the exam until 3 September, selected vendor, chose platform, coordinated mocks etc. After he abandoned all norms of professionalism (and legal norms as per the Supreme Court of India) by quitting CLAT without any notice or warning the other VCs had to take over from him at the last minute while he was busy organising the clusterf*ck of NLAT and didn't even lift a finger to help the CLAT anymore other than wasting their time in the court and causing stress to aspirants.

This is the charitable interpretation. If someone "happy to attack Sudhir", whose honour you are defending even though no one asked, they would accuse Sudhir of having taken his actions on purpose to sabotage the CLAT right from the start and to ensure he could get away scot-free started the NLAT and leave them with the CLAT mess he caused to disadvantage other NLUs against NLS.

If anything, Sudhir and his team had been so devoted to CLAT revamp, that they obviously came out with a sub-par test for NLAT at short notice. So, this bungled CLAT 2020 is the sole responsibility of the TLC-VCs currently running the show.

If Sudhir organised a sub-par test for NLAT, it was not because he was devoted to CLAT but because the "short notice" he gave himself to try and avoid any legal challenges was only 10 days. Unless you are alleging he had been preparing for the NLAT in secret for several months while also preparing the CLAT, which explains the shoddy job on both exams.

www.newindianexpress.com/states/karnataka/2020/oct/04/convocation-fees-nlsiu-students-cry-foul-2205583.html

Just one dream:

Chant the Messiah's name,

And make the students' money your own!

Cases in point: 50% NLAT money, poof! Robbing students by deducting fees for a convo that is never taking place. Taking away institutional scholarships for poor students. Messiah is sitting on Facebook oversight board, but who is sitting on the board having oversight over his actions? It seems funny that despite Facebook being hauled up for one ethical violation and another, they have found one man for the oversight board who himself is getting a reputation for his admin lapses.
